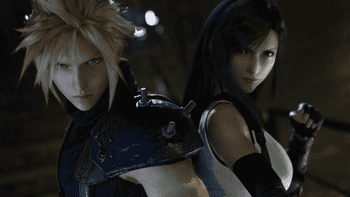

Cloti

Status of Relationship

Confirmed Mutual Feelings[1][2], Living Together as a Family[3][4] with an Adoptive Kid[5]

Also Known As

クラティ, Clotifa, Clouti, Cloudti, Kurati, Strifehart

- “Just… promise me one thing. When we’re older, and you’re a famous soldier… if I’m ever trapped or in trouble… promise you’ll come and save me.”

- — Tifa to Cloud

- “Well, it seems this old friend of mine’s in a tight spot. A long time ago I made a promise… so…”

- — Cloud to Tifa

Cloti is the het ship between Cloud Strife and Tifa Lockhart from the Final Fantasy fandom.

Canon

Cloud Strife and Tifa Lockhart were born in the mountain village of Nibelheim. Though they were neighbors, the two were never particularly close as children, due in part to Cloud’s shyness hindering his ability to socialize with the other kids. He soon developed a superiority complex to compensate for his desire to be invited into their group, spending his time watching Tifa with her friends and thinking they were “all childish, for laughing at every little stupid thing”, and choosing to believe he was different. At one point, Tifa’s mother passed away, and Tifa couldn’t accept it, so she went to Mt. Nibel, believing her mother would be there. While the dangerous path scared her friends off one by one, Cloud followed closely after, but failed to reach her in time when the bridge broke, causing them both to fall from the cliff. Tifa’s injuries resulted in a week-long coma, and her father blamed Cloud for the accident. Cloud said nothing in his own defense, instead blaming himself for his weakness.

Following the accident, Cloud became agitated and quick to get into fights with anyone, and assumed that Tifa must have hated him too; Tifa wasn’t allowed to talk to Cloud for a long time. At some point, Tifa’s runaway pet cat was found by Cloud, but due to his shyness, he had his mother return the cat to Tifa instead; this is when Tifa developed an admiration for Cloud.

Five years after the accident, Cloud invited Tifa, with whom he had started to fall in love[6], to meet under the stars at Nibelheim’s water tower, the village’s date spot[7]. There, he told her of his plan to leave Nibelheim and go to Midgar in the spring to join SOLDIER and become the best of the best, like the elite First Class Sephiroth, believing that Tifa would finally notice him if he became strong. Though a bit taken aback by the suddenness of Cloud’s decision, Tifa proposed that they make a promise for Cloud to come to her aid if she ever found herself in a pinch, describing it as a hero coming to her rescue. Cloud accepted, and soon left for Midgar. Tifa thought of him frequently during this time and began checking the newspaper for any trace of his name.

In spite of his bold claims and old feelings of superiority, Cloud fell short and failed to make it into SOLDIER, instead becoming a lower-level Shinra infantryman. Two years later, he traveled back to Nibelheim on assignment, accompanying both Sephiroth and another First Class SOLDIER, Zack Fair. Though Tifa was crestfallen that Cloud evidently wasn’t among the inspection team (he had hidden his face beneath his helmet out of shame at being forced to return home a failure), she guided them to the Mako reactor and was there to witness the brunt of Sephiroth’s massacre. Enraged over the murder of her father and the destruction of her home, Tifa confronted and attempted to attack Sephiroth, but was quickly injured. Though she was unable to recall it, Cloud had intercepted Sephiroth that night and carried her body out of harm’s way, before collapsing from his own injuries and being found by Professor Hojo’s team.

Final Fantasy VII

Five years later, Cloud reappears in Midgar and is discovered in a confused and weakened state by Tifa at the train station. At her request, made in the hope of quietly watching over him and his well-being, he joins the eco-terrorist group AVALANCHE as a mercenary for hire and introduces himself as an ex-First Class SOLDIER. Upon reaching Kalm and hearing Cloud’s recollection of what had happened the night of the Nibelheim Incident, Tifa becomes quiet, keeping her confusion over how he could possibly know this to herself in an effort to work things out without troubling Cloud. Her conviction in the accuracy of her own memories becomes increasingly precarious after meeting Zack’s parents, shaken by the confirmation that the SOLDIER existed while Cloud had somehow placed himself in Zack’s shoes within his own memories. During their stay at the Gold Saucer, Tifa is one of Cloud’s date options. When riding the gondola together, Tifa admits to being insecure about her feelings and wishes she could tell Cloud something; unfortunately, she fails to say anything.

Eventually unable to ignore the inconsistencies between her and Cloud’s recollections of the past, Tifa crumbles under the weight of her anguish when Professor Hojo deceptively claims that Cloud is only a failed Sephiroth clone who had molded himself from the stories Tifa had told of their childhood, and that it had all been false. With Cloud’s sense of identity shattered and his mind firmly under Sephiroth’s influence, he apologizes to Tifa and hands over the Black Materia, and is then lost when he falls into the Lifestream.

The team eventually travels to Mideel, where they rediscover Cloud, now with a severe case of Mako poisoning and in a near-comatose state. Disheartened and unable to break through to him, Tifa parts from the group and their cause in order to stay by Cloud’s side, caring for him for some time until the town collapses under the attack of the Ultimate Weapon, and they both topple into the Lifestream in her attempt to get Cloud to safety. Awakening to find themselves fully submerged in the manifestation of Cloud’s subconscious, Tifa speaks with various different Clouds and, piece by piece, helps him remember the truth of his past through his memories of her. Cloud recalls his feelings for Tifa, his desire to be close to her, his shame over his failure, and that he had in fact kept his promise to save her in her time of need, even though she hadn’t known it at the time. With the pieces of his subconscious fully mended, the two link hands and return to consciousness, where Cloud reclaims his role as the group’s leader, now fully accepting of his own true self.

Before their final confrontation with Sephiroth, the group separates to rediscover what it is they’re fighting for, with Tifa lingering behind with Cloud, reminding him that they’re the same in not having anywhere else to go. They wait out the remaining daylight underneath the Highwind, where Tifa struggles to explain the way she’d felt Cloud’s subconscious calling out to her, explaining that “words aren’t the only thing that tell people what you’re thinking” when Cloud in turn struggles to articulate his feelings for her. They later confirm their mutual feelings[8][9][10][11][12][13]. Tifa sleeps against Cloud’s shoulder, and they both linger there past dawn, understanding that there might not be another day like this. She notes the loneliness without the rest of their team when they finally re-enter the Highwind, though Cloud tells her not to worry and cheers her up with the declaration that he’ll “make a big enough ruckus for everyone.” When they find Barret, Cid, Red XIII, and Cait Sith (along with Yuffie and Vincent, should the player choose to include them in the party) already stationed inside, Tifa expresses dismayed embarrassment at the prospect that they might have been watching her night with Cloud.

On the Way to a Smile

The novel follows Cloud and Tifa’s development from right after the end of the original Final Fantasy VII up to the beginning of Advent Children. After Cloud, Tifa, and their allies save the planet from Meteor, the party disbands while Cloud, Tifa and Barret stay together. Cloud is positive about their future, reassuring Tifa by saying “this time… I think it’ll be okay. Because this time, I have you.” The three of them rebuild Seventh Heaven in the newly founded Edge, and when Barret eventually leaves to “settle his past”, they look after his adopted daughter, Marlene, on his behalf. Later on, Cloud acquires a motorbike he calls Fenrir, which is paid for with a lifetime voucher from Seventh Heaven, given to him by Tifa. Fenrir allows him to start Strife Delivery Service with the help of Tifa and Marlene, but also means he brushes up against his past.

As time passes, Cloud begins to distance himself from his family as his guilt over Aerith and Zack’s deaths grows, along with his anxiety that his newfound peace in Edge could shatter. His behaviour triggers Tifa’s deep fear of abandonment. Later on, Cloud finds an orphan boy named Denzel near the Sector 5 Slum church and calls Tifa after realizing the boy has Geostigma, asking what to do. Tifa immediately tells him to bring the kid home, but after hearing that Denzel has Geostigma she hesitates, since the illness was rumored to be transmittable and she worries it could infect someone in the family. Nonetheless, she lets Cloud bring Denzel back, and the two of them care for him while he and Marlene become friends. With Denzel around, Cloud’s mood improves and he starts to search for a cure for Geostigma. He shares with Tifa that he feels Aerith sent Denzel to him so that he could save the boy and atone for his sins, but Tifa corrects him, saying that Aerith sent Denzel to both of them, as she also feels responsible, knowing Denzel’s parents died when the Sector 7 plate fell. Cloud answers this with a smile. However, Cloud then contracts Geostigma himself and leaves their home without saying a word.

Traces of Two Pasts

The novel opens with Tifa narrating her past up to the beginning of the game, exploring her childhood with Cloud in greater depth. She tells Aerith how she used to have tea parties with her friends, a group of four boys her own age. She then shares how Cloud, one of those boys, started drifting away from her and the others, ignoring every one of their attempts to invite him into the group. Such behavior led to many fights and to his detaching completely from the group.

On her twelfth birthday, Tifa was gifted a cat, Fluffy, who would often run away from her. On one such occasion, Cloud was the one who found her. He was too shy to bring her to Tifa, so his mother, Claudia, returned Fluffy, leaving Tifa surprised, as she wasn’t aware that Cloud knew about her cat.

Eventually, each of the boys announced he was leaving Nibelheim, and for their final night in the village, Tifa decided to bake a cake to celebrate their parting. As she went out to buy the ingredients she needed, she met Cloud in front of the shop. He dashed up next to her and whispered in her ear to meet him at the water tower at midnight. In a heartbeat he was gone, and Tifa ran back home, forgetting why she had gone out to begin with.

When they were very young, the two of them used to play together all the time, running back and forth between their homes (which was easy since they were next-door neighbors). Tifa remembered her mother, Thea, complimenting the boy’s beauty, which had made her very happy, but also shy. She never knew why Cloud drifted away from her and everyone else. She thought it was related to the incident that followed her mother’s death, except Cloud had stopped coming over even before that.

Nevertheless, at midnight she snuck outside to meet him, wondering what kind of feelings she harbored for him and what he wanted to confess to her. Finally, she remembered how her mother had complimented the boy, saying he would grow to be even more beautiful than Sephiroth. Tifa realized then that her nervousness toward Cloud came from admiration, as she saw him as an unreachable star.

But after meeting up with him, she understood she was wrong. Cloud talked about how he was going to leave for Midgar, exactly like the other boys, but the mundanity of it—and how the night felt special to her—pushed her to make him promise her something. It was the realization of how Cloud wasn’t a star, but just a normal boy sitting next to her, that made her understand the nature of her feelings. She loved him, so much that she wanted to spend the rest of her days with him.

Spring came, and Cloud left the village in the middle of the night, before Tifa could say goodbye. Though she wasn’t surprised, she couldn’t stop herself from crying.

Left alone, she found that it was the thought of Cloud that helped her appreciate her new life. She learned to stop caring what others thought about her and to block out things she didn’t want to deal with, exactly like he did.

Eventually, more monsters appeared in the mountains and Shinra sent a team to investigate. The moment her father mentioned SOLDIER, Tifa thought about Cloud and how she had tried to seal away her feelings for him. As she turned red, she panicked and shut the door on her father. She had few memories of Cloud, yet she perceived them as “intricate crystal figurines” that she cared for delicately.

It was the incident on the mountain that stood out in her memory. After losing her mother, Tifa ventured up the mountain, hoping to meet her again, according to an old saying of the village. She was told that Cloud had shown up and lured Tifa deep into the mountains. The two soon fell, and while Cloud got away with scraped knees, Tifa hit her head and fell into a week-long coma. After waking up, she apologized for her actions. The villagers sympathized with her, blaming Cloud instead. He didn’t make it easy for himself, claiming the event happened exactly as the other boys had said, and when questioned he would shrug it off as “just because.” Tifa was never convinced, instead believing that Cloud had tried to protect her. She then decided she would confront him about it the next time they met.

The following day, she met Claudia while working. The first question that popped into her head was whether she knew if Cloud had made it into SOLDIER. Puzzled, Claudia asked why he would be in SOLDIER, and Tifa mentioned how Cloud told her he was going to join the elite force. Surprised by the revelation, Claudia only chuckled before resuming her work, leaving Tifa confused.

Before she could continue the conversation, Tifa was informed that her cat had escaped again, so she ran after her. She ended up in the mountains and was cornered by a monster. A member of the Turks saved her and asked for a guide to lead Shinra’s small investigative team to the Mako reactor on the mountain. Tifa volunteered.

Red XIII and Aerith, who are listening to Tifa’s story, ask if she would do it again, with her current knowledge of Shinra’s true intentions. Tifa realizes that she probably would, simply for the chance to be closer to Cloud.

Cloud then approaches the group and asks Tifa what she was talking about. When she mentions her tea parties with her childhood friends, he leaves with a scowl.

Advent Children

Two years after Final Fantasy VII, with On the Way to a Smile as a prologue, the movie opens with Cloud listening to a phone message Tifa left for him, as he has left their home in Edge without saying a word. Tifa stays behind, looking after the children and running Seventh Heaven. She doesn’t know where Cloud has been for the past two weeks, but trusts that he will call her back eventually. Curious about Cloud’s whereabouts, Tifa takes Marlene to the Sector 5 church, where they find a box of materia and bandages dirtied with Geostigma, and they conclude that this is why Cloud left. This is when Loz, one of the Remnants of Sephiroth, enters the church and picks a fight with Tifa. Tifa is able to hold him off for a bit, but she is soon overpowered, and Loz takes the box of materia and Marlene. Later, Cloud returns to the church, where he finds Tifa delirious in the bed of flowers. He runs to her and asks who did this to her, and Tifa responds, “He didn’t say…” At that moment, Cloud suffers an attack from his Geostigma and falls unconscious next to Tifa.

The two are found by the Turks, who take them back to Seventh Heaven to recover. After waking up, Cloud learns that children across Edge, including Denzel and Marlene, have been kidnapped by the Remnants of Sephiroth and taken to the Forgotten City. Afterwards, Cloud and Tifa have a conversation in which he admits his inability to let go of the past and his belief that he isn’t good enough to help anyone, neither his friends nor his family. As Cloud, fearing failure, shows no intent to save the children himself, Tifa retaliates by telling him not to run away, that he isn’t the only one in pain, and that he can heal by letting people in. She asks Cloud, “Have we lost to our memories?” before he leaves for the Forgotten City.

Later on, Bahamut SIN attacks Edge, and Tifa attempts to save Denzel from the Remnants while also fighting the monsters rampaging through the city. Cloud arrives amidst the chaos and saves Tifa from a falling building, letting her know that after reflecting on it, he has shed some of the weight of guilt he was carrying and is starting to feel better. His words reassure Tifa, who smiles at him peacefully. Tifa also helps Cloud in his fight with Bahamut SIN.

Tifa is aboard Cid’s airship, the Shera, when Cloud faces off against Kadaj and the resurrected Sephiroth, supporting him from afar. She explains to the rest of the group, who are confused about what is going on with Cloud, how he truly feels, mentioning that he has finally regained part of his lost mental strength. During the fight, as Sephiroth says he wants to take away what Cloud treasures most, Tifa, together with Marlene, Denzel, Aerith and Zack, appears in a brief flash showing the people most precious to Cloud.

After the defeat of the Remnants and Sephiroth, Cloud wakes up in the Sector 5 church surrounded by his family (Tifa, Denzel, Marlene) and friends. Cloud cures Denzel of his Geostigma, and Tifa smiles at him proudly, which he reciprocates. He then returns home to her and the kids. Some days later, he asks her to close the bar to have a family picnic, during which Cloud also brings Denzel to Zack’s grave, which now blooms with flowers.

Final Fantasy VII Remake

While there are a few differences between the original and remake of Final Fantasy VII, their bond remains the same.

Cloud and Tifa were childhood friends before Cloud left to become a SOLDIER with Shin-Ra. They reunite in the slums of Sector 7 and rekindle their friendship. While not particularly close, they are on cordial terms and develop a partnership alongside Barret and a few others. While Cloud is distant and cold, Tifa is warm and somewhat affectionate toward him.

The two work well together as a team and often playfully banter with each other during fights. Tifa seems to think highly of Cloud, as it was on her recommendation that Barret and co. hired him as a mercenary for their job to destroy Mako Reactor 1. Nonetheless, she is partly fearful of Cloud’s ruthless nature and admitted to him that he scared her when he was willing to kill Johnny. Cloud himself often seems unsure of what to say to Tifa. He readily goes along with her requests, agreeing to do odd jobs around the Sector 7 slums, and is very forgiving when she is forced to delay his payment for the job he had completed for her group.

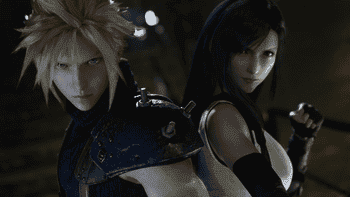

Cloud and Tifa as children.

Both of them care for each other and will come to one another’s aid. Cloud saved Tifa as they jumped from a moving train car, briefly sharing a moment before letting go. When Tifa decided to pursue Don Corneo, Cloud was worried for her because of the danger involved, and determinedly sought to save her from him. Tifa, in turn, saved Cloud before he fell off a tower after his fight with Rufus. She was also distressed at leaving him behind after they destroyed Mako Reactor 5, being forcibly carried away by Barret, and was later relieved to see he was alive. While Cloud was being pursued by Reno and Rude, she chose to go after him and eventually reached his position, where he caught her before she fell. On top of this, Tifa has also shown concern for Cloud when he suffers from his mental disorder. Despite his stoic demeanor, Cloud will show his soft side to Tifa and tell her that if she ever wants to talk, he will listen, which surprises Tifa given his normally distant nature. He also became upset for her when she grieved over the tragedy of the plate being dropped on Sector 7, and held her in his arms as she cried in the middle of the night (this depends on the player’s earlier choices).

Cloud and Tifa reunite in Seventh Heaven.

Cloud can occasionally flirt with Tifa, while Tifa comes off as playful through the majority of their interactions. Tifa has displayed jealousy over Cloud: after meeting Aerith, she interrogated Cloud as to how he knows her and, in response to him saying they simply saved each other, asked “And that is all there is to it? Sure there isn’t something else going on?” Later, Tifa seemed to become jealous once more when Aerith took Cloud’s arm as they went through the train graveyard, and she stormed over and took his other arm. Cloud has made some flirtatious comments towards Tifa, such as when she served him the bar’s strongest beverage and, since its red colour matches Tifa’s eyes, he held it up to her and commented “beautiful”. After receiving a flower from Aerith that was allegedly given to reunited lovers, he gifts that same flower to Tifa when they reunite for the first time since they were children. Cloud is also not immune to jealousy: when a store owner made suggestive comments towards Tifa, he notably became antagonistic. This happens again when Chocobo Sam makes comments about her and Cloud once more becomes antagonistic, with Sam picking up on how close he is to her.

Tifa’s fondness for Cloud was displayed when they were children, as in a private conversation she asked him to promise that he would save her whenever she was trapped. She would later tell Cloud that she feels “trapped” by her devotion to AVALANCHE while being conflicted by the results of their methods. Cloud picks up on this and remembers the promise he made to her. As such, when she visits his apartment and becomes concerned that he will leave Midgar soon, he assures her that an “old friend of mine is in a tight spot” and reiterates his promise to her. Tifa seems silently happy that he remembers the promise, but apologizes for dropping it on him. The two have since left Midgar, alongside Barret, Aerith and Red XIII, to pursue Sephiroth.

Moments

FFVII Remake

- After Tifa notices the flower Cloud is carrying, he tries to act cool as he hands it to her. Aerith had earlier told him that lovers would give each other this flower when reunited.

- In the Seventh Heaven bar, Tifa can serve Cloud their strongest drink. The drink is red, exactly like Tifa’s eyes, and Cloud holds it towards her and comments “beautiful”.

- In Chapter 3’s Alone at Last, Cloud misinterprets the situation, thinking Tifa is going to ask him something else, when she instead wants to talk about what happened after he left Nibelheim. This leaves him feeling “let down”, or disappointed.

- Cloud holds onto Tifa for an extended amount of time after they dive out of a moving train car. Their faces are very close, and they seemingly look into each other’s eyes before letting go.

- In the middle of the night, Tifa can be seen standing on her own outside Aerith’s house. Cloud can go up and talk to her. Here she grieves in front of him, and the two end up hugging as she cries. It was later confirmed that Cloud felt happy in this moment, as he had always wanted to become a cool hero who could save Tifa.

- In an earlier scene, Cloud saw Barret console Tifa by holding her as an adult would, and he wanted to comfort her the same way.

- Andrea Rhodea, one of the trio associated with Don Corneo, who had played a key role in helping Cloud save Tifa from his boss, was pleased to hear Cloud had saved her and warned him never to let her go again.

- In Chapter 18’s Destiny Crossroads, the group thinks about the people they want to protect. The Ultimania Plus states that Tifa was thinking about the friends they had lost and that Cloud was looking at her.

Children

Denzel

Denzel.

Denzel is an orphan boy Cloud and Tifa took in, as they felt obligated to care for him out of responsibility for what happened to Sector 7, where Denzel’s parents were among the many victims. When Cloud found Denzel by his motorbike, he felt that finding the boy was no coincidence, and that Aerith might have been the one who brought Denzel to his bike so he could find him. Moments before Cloud found Denzel, the boy had found Cloud’s phone and called a number from its incoming call list, which put him in contact with Tifa, who was surprised to learn that the person calling her wasn’t Cloud. Denzel enjoys hearing Cloud’s stories of the places he has been on his journey and began to view Cloud as his hero. When Cloud and Tifa learned of Denzel’s Geostigma, Cloud felt obligated to search for a cure before the illness killed him, but he began to lose hope when he realized that he had it too, while Denzel’s own search for a cure got him into dangerous trouble that both Cloud and Tifa fought to save him from. In the end, Cloud was finally cured of his Geostigma and later used the Lifestream-infused water to cure Denzel’s. Before the two went to help their friend Vincent in Dirge of Cerberus -Final Fantasy VII-, they spirited both Denzel and Marlene away to safety so Deepground wouldn’t harm them. Sometime during the following year, Denzel and Cloud visit the outskirts of Midgar, where they see yellow flowers growing in the place where Zack died; Denzel asks if this is someone’s grave, and Cloud replies that it is the spot where a hero began his journey. At the end of Reminiscence of Final Fantasy VII there is an extra scene in which Cloud is heard on a phone call with Tifa, asking her to spend some time together the following day alongside Denzel and Marlene.

Development

Final Fantasy VII

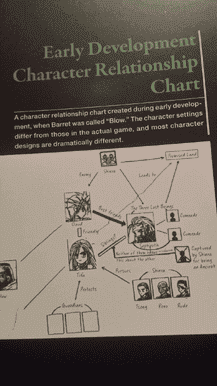

Final Fantasy VII Early Relationship Chart where Tifa was the Cetra instead of Aerith

According to an early development chart for Final Fantasy VII, Cloud and Tifa were already linked characters. At that time, Tifa’s role was that of the Cetra, who was also Cloud’s childhood friend and Sephiroth’s sister[14]. This character later became Aerith, as she, Cloud, and Barret were the only playable characters shown in the first promotional articles in many magazines. Tifa was brought back by Tetsuya Nomura, the character designer, because the decision to kill Aerith created the need for another heroine whose role was to stay by the hero’s side until the end.[15]

- The original depiction of Cloud and Tifa’s “moment” under the Highwind was more suggestive: following a fade-out, Cloud was to walk out of the airship’s chocobo stable, followed shortly by Tifa, who would look around as she left, implying the two had spent the night together. Yoshinori Kitase toned this idea down into the less risqué conversation scene, considering it too “extreme”.[16]

- According to Final Fantasy 25th Memorial Ultimania Vol. 2: “When Cloud was a young child, he was isolated from those around his age, and so he tried to convince himself that he must be special. However, Tifa was still important to him; this childhood awakening of love is revealed in the spirit world.”

- On page 15 of the FFVII Ultimania Omega: “In the eyes of Cloud, who had no faith that he even truly existed, Tifa was the sole support of his heart with their shared past as his childhood friend; she was undeniable proof that he did exist.”

- According to Final Fantasy Complete Works volume 2 pg. 232: “Even if the others don’t come back, Tifa alone was a good enough reason for Cloud to keep fighting.”

- Tifa is described as “the woman who understands him all too well and devotedly supports the mentally-weak side of him” in FFVII 10th Anniversary Ultimania Tifa Lockhart Character Profile, pg. 42-47.

- Tifa’s theme was originally composed with a strong pastoral image featuring woodwind instruments. In this version, the composition leans more towards expressing Tifa’s enveloping kindness towards her childhood friend, Cloud. It also aims for a relaxing sound that shows he can finally breathe easy.

Final Fantasy VII: Advent Children

- The original script was for a short of just 20 minutes featuring only Cloud, Tifa, and the children.[17]

- Nomura said that Cloud was experiencing a peaceful life he had never had before, and therefore became anxious. In Advent Children, his Planet Scar Syndrome (Geostigma) took hold, and he ran away, thinking he couldn’t protect anyone precious to him.

- On page 70 of Reunion Files, Nojima stated: “Inside, I felt one thing for sure: Cloud and Tifa would be together. Everybody would be back home where they belonged.”

- According to the Advent Children Prologue book, Tifa has been the only one Cloud has opened his heart to.

- To Tifa, Cloud is the only one through whom she can stay in touch with her past. He is her ideal love, and his existence is also like that of a prince who promised to come to her rescue when she is in trouble. (Tifa profile, Advent Children Prologue)

- According to Nobuo Uematsu, the soundtrack piece “Cloud Smiles” was inspired by Cloud and Tifa smiling at each other at the end of Advent Children.

Final Fantasy VII: Remake

- According to director Nomura, the voice direction for Cloud specified that when he talks to Tifa, his true self briefly emerges.[18]

- According to Toriyama (Co-Director & Scenario Planning), the moment at the counter in Seventh Heaven where Cloud holds up his glass and says “Beautiful” is Cloud trying to seem cool but not quite pulling off the act.

- Unlike in the original Final Fantasy VII, Cloud and Tifa live side by side in the same building instead of living with the other AVALANCHE members in Seventh Heaven’s hideout. Of the scene at night where Tifa visits Cloud’s room, Toriyama explains that their conversation is so raw and realistic it makes the player’s heart race, as the use of voices and facial animations changed how characters are depicted compared to the original version.[18] Nojima, the story and scenario writer, also said that the scene itself is quite sweet, so he wanted players to look forward to it.[19]

- The “Resolution event” was made to offer an experience similar to the original Final Fantasy VII’s Gold Saucer date. In Tifa’s resolution event, Toriyama originally had it written in the script that Cloud would hold himself back just before giving Tifa a hug. But Nojima said, “He’s young. He’d probably just go for it,” so Toriyama changed it to a full embrace.[18]

- At a PLAY! PLAY! PLAY! event, Yoshinori Kitase (producer) spoke about a scene he was in charge of designing: Tifa saving Cloud on the Shin-Ra building’s roof in Final Fantasy VII Remake. He said that it is a parallel to, and an answer to, the Mako Reactor 5 scene. He had always wanted to make Tifa’s wish to save Cloud come true since the original game, and this has become one of his favorite scenes in Remake.

- The Final Fantasy VII Remake official soundtrack song “Midgar Blues” shows Cloud’s point of view about moving to Midgar to join SOLDIER.

- The lyrics hint at a romantic childhood moment between him and Tifa, calling her “his one true love.”

- The promise of protection is heavily implied in the fifth stanza of the song.

Fanon

Cloud/Tifa has been extremely popular since 1997. It is a popular Final Fantasy VII ship and one of the most popular ships in the entire series. Along with Zack/Aerith, it is considered a “rival ship” to Cloud/Aerith. Both ships have evidence and material to support them, and the fandom ship war is ongoing. Since some brief Cloti moments were seen in the Kingdom Hearts series, a small group of fans base their fanworks on the characters’ KH counterparts, commonly depicting them as a couple in their MMD KH/FF comic strips.

On AO3, Cloti is the second most written ship for Cloud and the most written ship for Tifa, with over 2,200 fanfics.

Cloti is the second most written ship in the Final Fantasy VII and Compilation of Final Fantasy VII tags and the most written ship in the Final Fantasy VII Remake tag.

Fandom

- FAN FICTION

- Cloud/Tifa tag on AO3

- Cloud/Tifa (FFVII) works on FanFiction.net

- Cloud/Tifa (KH) works on FanFiction.net

- Cloud/Tifa (Dissidia) works on FanFiction.net

- FAN ART

- Cloud-love-Tifa fanclub on DeviantArt

- CloTi-Fanfiction fanclub on DeviantArt

- cloudandtifa fanclub on DeviantArt

- Cloud–x–Tifa fanclub on DeviantArt

- TheSOLDIERSPromise fanclub on DeviantArt

- PromiseUnderNightSky fanclub on DeviantArt

- CloudxXxTifa fanclub on DeviantArt

- TUMBLR

- Cloti posts on Tumblr

- Cloud x Tifa posts on Tumblr

- WIKIS

- Cloud/Tifa on Fanlore

Trivia

- A few Cloti-like moments were seen in Kingdom Hearts II.

- Nojima explained Cloud and Tifa’s connection in more depth in the original scenario, but Nomura removed it to let players form their own interpretations. One example given was that “if Cloud’s darkness is Sephiroth, then Tifa is the light,” suggesting that Tifa only interacts with Sora and the others because she may not exist as a human being.[citation needed]

- Cloud and Tifa are placed on opposing sides in Dissidia 012. Despite this, Cloud fights his own teammates and confronts the God of Discord in a bid to spare her. Defeated, he begs the Goddess of Harmony to save Tifa, causing Cosmos to recognize this as his “heart’s desire” and accept him on her side.

- At the Square Enix cosplayer fashion show, an official event at TGS, cosplayers advertised the new Cloud and Tifa Opera Omnia merchandise alongside other talented cosplayers.

- In Opera Omnia, Cloud is known for being jealous and possessive over Tifa, and he has been teased about it.

- When Cody Christian (Cloud Strife’s English voice actor) was asked who he would take to the Gold Saucer, he answered Tifa. He also personally believes that Tifa had the biggest impact on Cloud’s journey.

- Takahiro Sakurai (Cloud Strife’s Japanese voice actor) admitted that he is a Tifa man.

- The ship has been featured on the Fandometrics most reblogged ships list multiple times:

- It was the 12th most reblogged ship on Tumblr the week ending April 20th, 2020.[20]

- It was the 15th most reblogged ship on Tumblr the week ending April 27th, 2020.[21]

Gallery

Fan Art

- All art in this section is fan made, and must be sourced back to the original artist. It also must have permission from the original artist to be posted here. If your art is here without your permission and you wish to have it taken down, please inform an admin, so that we may delete it.


FINAL FANTASY 7 REMAKE All Tifa and Cloud Flirting Scenes

FF7 AMV – Everything I Need -Cloti – Cloud x Tifa-

FF7 AMV – Stand By You -Cloti – Cloud x Tifa-

-Cloud X Tifa- FFVII – r – Thousand year

-Cloud x Tifa- FFVII – rewrite The star

Cloud x Tifa – Love Story

✨Cloud & Tifa – Perfect ✨

Cloud & Tifa – Listen To Your Heart

-Cloud x Tifa- FFVII – r – Right Here

References

- ↑ “Before the final battle with Sephiroth, Cloud – who regained himself thanks to Tifa – confirms his feelings with her, feelings that cannot be conveyed with words.” (Final Fantasy 20th Anniversary Ultimania File 2: Scenario, page 394)

- ↑ True Desire Made Clear: “In his youth, Cloud stayed isolated from his peers. He was an eccentric child who always viewed himself as different from the rest. The only person he held close to his heart was Tifa. Their love is brought into the light when Tifa explores Cloud’s subconscious.” (Final Fantasy VII Ultimania Archive Volume 2, pg. 021; Memorable Scenes)

- ↑ “The present Tifa isn’t just Cloud’s childhood friend, but also the mother of the ‘family’ they were forming in Edge.” 現在のティファはクラウドの幼馴染であるだけでなく、クラウドがエッジに築いた「家族」の母親でもある。(Final Fantasy VII 10th Anniversary Ultimania, page 46)

- ↑ “Cloud, who had stayed silent this whole time, spoke. ‘We are not related by blood, but we are a family. Just like you.'” (Final Fantasy VII The Kids Are Alright: A Turks Side Story)

- ↑ Cloud’s Promised Land: The Place He’s Welcomed Back After Awakening. “When Cloud awakens, he finds himself among his companions and children who’ve been freed from their deadly disease. His family is there waiting for him: Tifa, Marlene and Denzel, who asks to be cleansed of Geostigma by Cloud’s hands. Surrounded by blessings, Cloud realizes this is the place where he’s meant to live.” (Final Fantasy VII 10th Anniversary Ultimania File 2: Scenario, pg. 133, chapter 19; Story Playback)

- ↑ “Before leaving for Midgar, Cloud declared ‘I’m going to become a SOLDIER’ to Tifa, a village girl he had started to fall in love with, and also promised to protect her.” (Crisis Core: Final Fantasy VII Ultimania, page 24)

- ↑ In Crisis Core: Final Fantasy VII The Complete Guide, it was stated that the water tower in the center of Nibelheim was considered a dating spot.

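- ↑ “Before the final battle, he declares a temporary disbandment and exchanges his feelings with Tifa, who remained on the airship.” 最終決戦を前に一時解散を宣言し、飛空艇に残ったティファと想いを通わせる。(Final Fantasy VII Ultimania Omega, pg. 15; Cloud’s profile)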

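- ↑ “At Cloud’s suggestion, the party temporarily disbands, but she remains on the airship and exchanges her feelings with Cloud.” クラウドの提案で一時解散することになるが、飛空艇に残り、クラウドと想いを通わせる。(Final Fantasy VII Ultimania Omega, pg. 27; Tifa’s profile)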

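- ↑ “Their companions scatter to the places where their loved ones await, leaving Cloud and Tifa alone together. In the last time remaining to them, they confess (their feelings for each other), and then…” 大切な人の待つ場所へと仲間が散っていき、ふたりきりになったクラウドとティファ。残された最後の時間で(お互いの想い)を打ち明け、そして……。(Final Fantasy VII Ultimania Omega, pg. 198; story summary)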

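- ↑ “She and Cloud exchange their feelings near the end of the story, and in the era of AC and DC they live together.” クラウドとは物語の終盤に想いを通わせ、「AC」「DC」の時代は一緒に暮らしている。(Crisis Core: Final Fantasy VII Ultimania, pg. 33; Tifa’s profile)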

- ↑ “Cloud and Tifa, who remained, confess (their feelings for each other) and confirm them.” 残ったクラウドとティファは、(互いへの想い)を打ち明け、確かめ合う。(Final Fantasy VII 10th Anniversary Ultimania, pg. 118; pg. 120 in the Revised Edition; story summary)

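- ↑ “And so Cloud and Tifa, left alone together, confess (their feelings for each other) in the last time remaining to them.” そして、ふたりきりになったクラウドとティファは、残された最後の時間で(互いの想い)を打ち明け合う。(Final Fantasy 20th Anniversary Ultimania File 2: Scenario, pg. 232; main body of FFVII’s story summary)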

- ↑ Final Fantasy 25th Anniversary Memorial Book

- ↑ Famitsu, ed. (1997). Final Fantasy VII Kaitai Shinsho (in Japanese).

- ↑ Final Fantasy VII 10th Anniversary Ultimania Revised Edition, p. 8-13

- ↑ Dreamaga magazine, November 2005 edition, pages 12-14, Commemorating the Release of Final Fantasy VII: Advent Children: Venice International Film Festival Report and Interviews with the Staff and Cast.

- ↑ 18.0 18.1 18.2 Development Staff Interview – Part 2, Final Fantasy VII Remake Ultimania, pages 742-747

- ↑ Inside Final Fantasy VII Remake – Episode 2: Story and Character

- ↑ Week Ending April 20th, 2020

- ↑ Week Ending April 27th, 2020

Variations

- Clerifa refers to the ship between Aerith Gainsborough, Cloud, and Tifa