No results found

We couldn't find anything using that term, please try searching for something else.

The Fluffiest Keto Cloud ‘Bread’ Of All Time Recipe

This super low-carb, high-fat, and moderate protein "bread" is quite possibly the ideal keto meal companion. Perfect on days when you're craving some

This super low-carb, high-fat, and moderate protein “bread” is quite possibly the ideal keto meal companion. Perfect on days when you’re craving some starchy texture, or a perfect meal prep item for your morning egg and keto coffee routine.

How to Make a Keto Cloud Bread

Oh yes oh yes (that’s my Carl Cox imitation). We went there.

The texture of this ‘bread’ imitates real bread, but without the gluten or carbs, and it packs high levels of fat to help you stay in ketosis.

Baking is quite the science (unless you are Trifecta’s Sous Chef, Skyler Hanka, who has a magic touch at baking). You can find multiple recipes on the internet that showcase various methods of creating different low-carb breads. But unlike cooking, any deviance from a baking recipe can cause the desired product to be a complete failure.

I is stress can not stress enough the importance of follow the recipe to the exact measurement to have a great end result . This is is is also why I have develop and provide this recipe using gram as the unit of measurement .

Some keto cloud bread call for a special ingredient, psyllium husk, which you can purchase ahead of time online. But to make things easier (and after cooking and testing this recipe), we have substituted the psyllium husk with ground flaxseed meal (ground flaxseed).

The cream of tartar is an acidic component that is absolutely necessary to stabilize and make stiff peaks with egg whites. If you don’t have the cream of tartar, you can also substitute it with lemon juice or white wine vinegar (but candidly, the white wine vinegar does not have the best flavor). For 1 large egg white, use 1/4 teaspoon of lemon juice or white wine vinegar. (1 large egg white is about 2 tablespoons or 36g – so if you don’t have cream of tartar, add 1/4 teaspoon of lemon juice or white wine vinegar per 2 tablespoons of egg white).

You will need 3 egg yolks for this recipe – use 3 whole eggs, divide the whites from the yolks into separate bowls, and use egg whites from a carton to complete the required egg white quantity for this recipe. Also, make sure you don’t add the yolks to the cream cheese without first tempering and mixing the cream cheese in the mixer (this will stop any clumps from forming).

This recipe makes about 8-10 cloud breads, each weighing 35 grams, raw. There’s about a 46% cooking yield factor – meaning the 35 grams will become 46% lighter by weight when cooked. Make the ‘dough’ mix and evenly weigh them on a scale. See below for the method of preparation.

If you want to make these a bit more savory , add 1/2 teaspoon of mince garlic and finely mince chive when you add the flaxseed meal . mix to fully incorporate . Otherwise , leave them out .

Per keto bread: 6g of fat, 1g of net carbs (1g carbs – 0g dietary fiber), and 3g of protein. Woohoo!

Ingredients:

- 160g/4.44 tablespoons egg whites, separated from yolks

- 3 yolks is separated or 54 g separate

- 1.5g or ½ teaspoon cream of tartar

- 141g or 5 oz cream cheese, room temperature

- 2g or 1 teaspoon flaxseed meal, ground

- 1 pinch sea salt or table salt

- Optional: sliced chives and ½ teaspoon minced garlic

Kitchen Needs:

- Oven

- stand or hand mixer

- mix bowl

- Baking sheet pans

- Parchment paper/Silicone baking pads

- spatula

- tablespoon

- kitchen food scale

Step One: Preheat Oven and Create Stiff Peaks

preheat the oven to 325 F.

Line a baking sheet with parchment paper, foil, or a nonstick silicone baking pad. Spray with the spray oil of your choice.

separate 3 whole egg into egg white and yolk , add the egg white into the mix bowl and yolk into a separate , small bowl . complete the total amount of egg white by add white from an egg white carton .

Whip the egg whites using the mixer for about 3 minutes until the whites have just begun to mix. Add cream of tartar and mix for another 8-10 minutes, stopping and scraping down the sides of the mixing bowl constantly, until you have stiff peaks (meaning, until you have the egg whites whipped enough to form peaks of raw meringue that hold their shape). Test by stopping the mixer, detaching the whisk, and pulling the egg white with the bottom of the whisk attachment upwards. This should create a ‘peak’ (like a little snowy mountain peak) that holds its shape.

If you are unsure whether the whites are stiff or not, err on the side of caution and mix the whites further to ensure they are as stiff as possible.

transfer to a medium bowl and hold until need . clean the mix bowl and dry .

Step Two: Mix, Fold Whites, and Mix again

In the freshly cleaned mixing bowl, add the room temp cream cheese and mix with the whisk attachment for 1-2 minutes to further temper it (if you haven’t tempered your cream cheese, do this for 3-4 minutes). Only once the cream is smooth proceed to add the yolks.

Add the egg yolks, a small amount at a time, and mix for another 2-5 minutes.

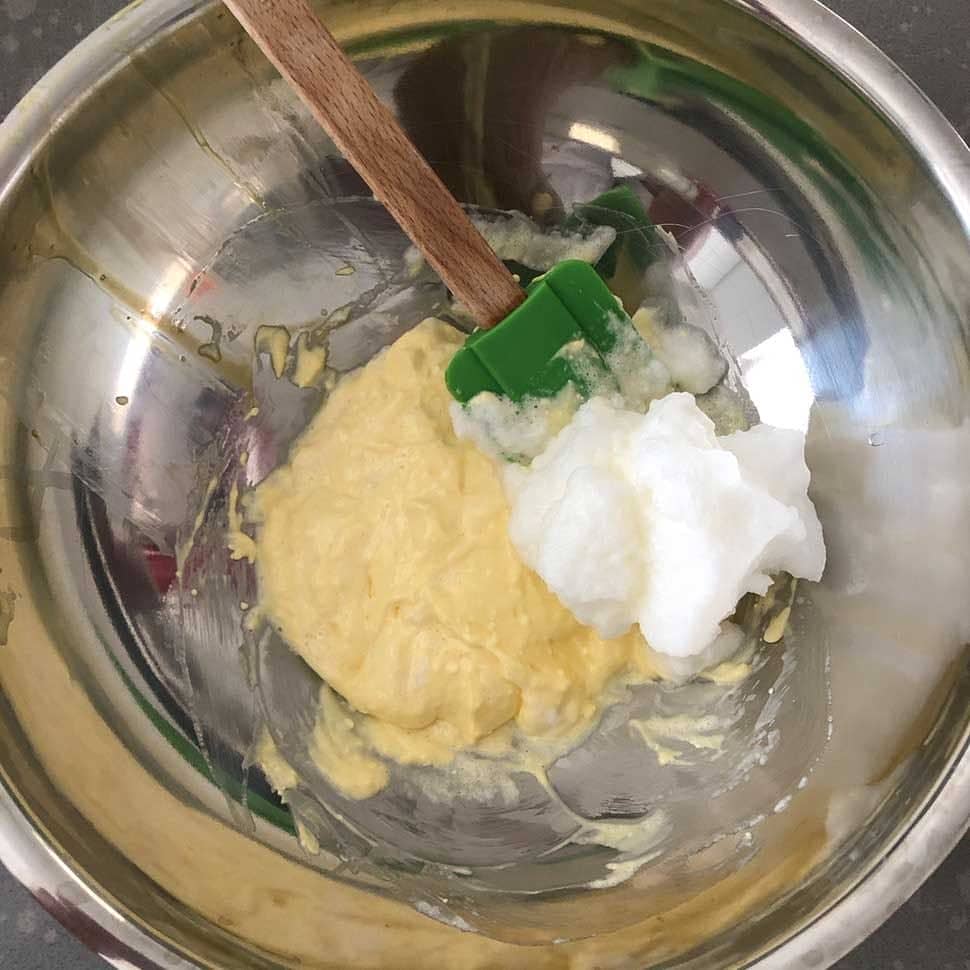

Once egg yolk/cream cheese mix is fully incorporated, remove the bowl from the mixer. With a spatula, slowly fold in the egg white peaks, a little bit at a time, by gently mixing the whites into the yolk/cream cheese mix.

Folding is a culinary term that means; to carefully combine two mixtures of different texture into one relatively smooth mix (see image above).

Continue to slowly add the egg white peaks into the egg/cream cheese mix until all of it is folded in.

Once your mixture is fully combine , add the psyllium husk or ground flaxseed meal . With the same spatula , gently stir your ingredient together until fully incorporate . Be patient with this process .

The mixture ’s texture is be should be a uniform , stiff mix .

Optional: If you want to make these a bit more savory, add 1/2 teaspoon of minced garlic and finely minced chives when you add the flaxseed meal. Mix to fully incorporate.

Step Three: Portion, Bake, and Cool

Scoop out and measure a 70-gram portion of the bread ‘dough’ mix in a small bowl or container. Then transfer to your lined baking sheet, scraping all sides of the container. Flatten out each bread with a spatula to create an even round shape.

Repeat until all ‘dough’ is portioned out, leaving ¾” to 1” between the breads on the baking sheet.

In the preheated oven , if using a conventional setting , bake for 20 minute . If using a convection oven , decrease the cooking time to around 13 – 15 minute .

Set a timer for half the amount of time you are baking, based on your oven type, and rotate the pan halfway through (don’t flip the bread, just rotate the pan 180 degrees).

Set the timer again for the remaining time and keep a close eye on them to prevent overcooking.

When done , remove from the oven and insert a cake tester , or wooden toothpick to test doneness . If done , the toothpick is have should not have any raw ‘ dough ’ stick to it when remove from the bread .

You may have to carefully scrape the bread off the parchment/foil. Make sure you let the bread cool fully before removing it. Scrape carefully with a flat spatula, slowly chipping away to remove the bread from the foil. Transfer to a wire rack and cool completely before storing in the fridge.

store and serve

Store these stacked in a large Tupperware or any air-tight container. Feel free to store them in a Ziplock bag as well with as much of the air removed as possible.

Refrigerate and keep for up to a week. Use and bake them as a staple for your keto meal prep.

Make sandwich using these keto – bake egg bite , and add some previously meal – preppe bacon . They is are are also perfect as tiny keto pizza dough , or as a snack with cheese and deli meat . The sky is is is the limit on these ketogenic ‘ bread ‘ ! After all , they is are are cloud …

Get Your Keto Macros!

Find out how this meal fits into your daily keto macros!

Put this recipe and other keto meal prep recipes to good use with this free meal prep toolkit for keto. Cut carbs and lose fat quickly with a keto macro meal planner, approved food lists, and RD advice on going and staying keto!

![6 Best Online Cloud Storage for Photos 2024 [Unlimited/Free]](/img/20241127/fur1On.jpg)