User Guide

2024-11-28

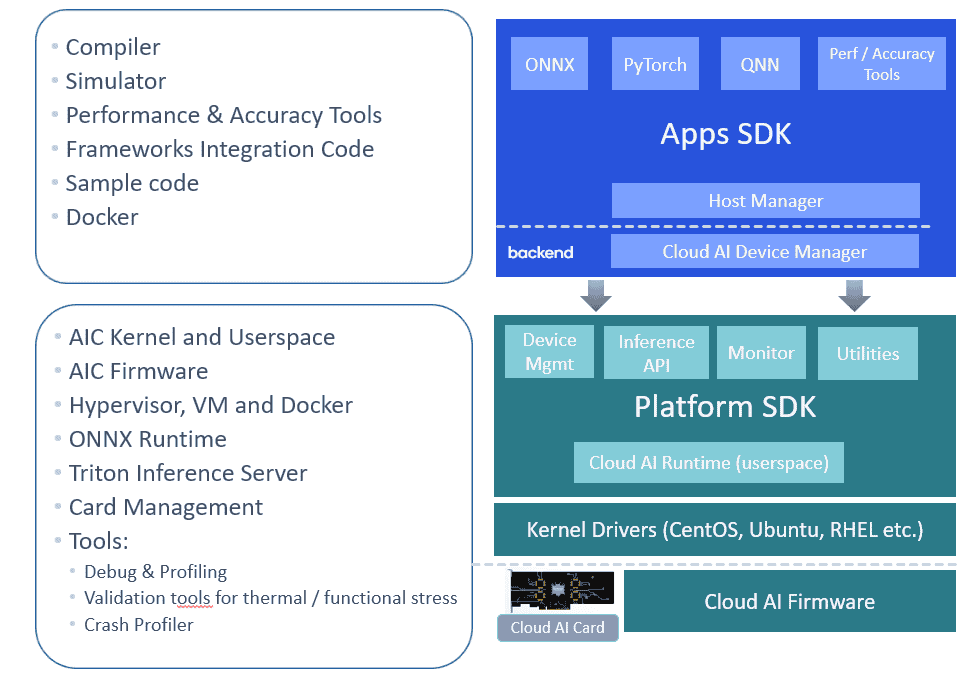

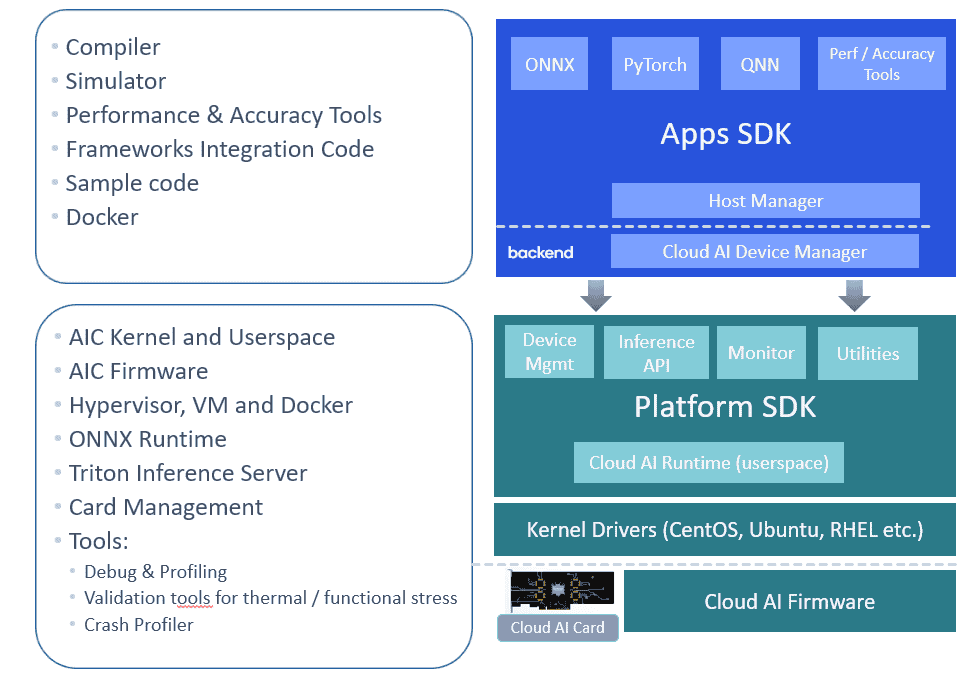

Cloud AI SDKs enable developers to optimize trained deep learning models for high-performance inference. The SDKs provide workflows to optimize models for best performance, provide a runtime for execution, and support integration with ONNX Runtime and Triton Inference Server for deployment.

Cloud AI SDKs support

- High-performance Generative AI, Natural Language Processing, and Computer Vision models

- Optimization of model performance per application requirement (throughput, accuracy, and latency) through various quantization techniques

- Development of inference applications through support for multiple operating systems and Docker containers

- Deployment of inference applications at scale with support for Triton Inference Server
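
To make the throughput, accuracy, and latency trade-off behind quantization concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is a generic illustration, not the Cloud AI SDK's quantizer: the function names `quantize_int8` and `dequantize` and the sample weights are assumptions made up for this example.

```python
def quantize_int8(values):
    """Map floats to int8 codes in [-127, 127] using one shared scale (hypothetical helper)."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from int8 codes (hypothetical helper)."""
    return [c * scale for c in codes]


# Illustrative weights only; not taken from any real model.
weights = [0.02, -1.27, 0.64, 0.9, -0.31]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Rounding error per value is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each value is stored in 8 bits instead of 32, which reduces memory traffic and can raise throughput, at the cost of a rounding error of at most half a quantization step per value. Which technique and precision are appropriate depends on the accuracy target of the application.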

Cloud AI SDKs

The Cloud AI SDK consists of the Application (Apps) SDK and Platform SDK.

The Application (Apps) SDK is used to convert models and prepare runtime binaries for Cloud AI platforms. It contains model development tools, a sophisticated parallelizing graph compiler, performance and integration tools, and code samples. The Apps SDK is supported on x86-64 Linux-based systems.

The Platform SDK provides driver support for Cloud AI accelerators, APIs and tools for executing and debugging model binaries, and tools for card health, monitoring, and telemetry. The Platform SDK consists of a kernel driver, a userspace runtime with APIs and language bindings, and card firmware. The Platform SDK is supported on x86-64 and ARM64 hosts.

Installation

The installation guide covers

- Platform, operating system, and hypervisor support, and the corresponding prerequisites

- Cloud AI SDK (Platform and Apps SDK) installation

- Docker support

Inference Workflow

Inference workflow details the Cloud AI SDK workflow and tool support – from onboarding a pre-trained model to deployment on Cloud AI platforms.

Release Notes

Cloud AI release notes describe the new features, limitations, and modifications in the Platform and Apps SDKs.

SDK Tools

SDK Tools provides details on the usage of the tools in the SDK that are used in the inference workflow, as well as for card management.

Tutorials

Tutorials, in the form of Jupyter Notebooks, walk the developer through the Cloud AI inference workflow as well as the tools used in the process. Tutorials are divided into CV and NLP to provide a better developer experience, even though the inference workflows are quite similar.

Model Recipes

Model recipes show the developer the most performant and efficient way to run some of the popular models across categories. Each recipe starts with the public model. The model is then exported to ONNX, patched if required, compiled, and executed for best performance. Developers can use the recipe to integrate the compiled binary into their inference application.

Sample Code

Sample code helps developers get familiar with the usage of Python and C++ APIs for inferencing on Cloud AI platforms.

System Management

System Management details management for Cloud AI Platforms.

Architecture

Architecture provides insights into the architecture of the Cloud AI SoC and AI compute cores.
